How we mitigated product risk while building AI features

- March 7, 2024

Building AI features is inherently risky — much riskier than traditional non-AI features. One of the reasons it’s so risky is there’s a chicken-and-egg problem: you can’t know the quality of an AI response without building the system to see an actual AI response.

In other words, you must build AI to learn what AI is valuable to build. How can a team crack that question without spinning its wheels or getting lost? A new approach is needed.

Over the past few months, we went from low-confidence early AI ideas to launching AI Assist, our new set of tools that lets teams build AI-powered in-product assistance into their products.

Here’s an example of AI Assist:

Building AI Assist taught us three important product lessons about mitigating risk, each of which differs from how traditional features are built:

- Prioritize technical research to learn what’s possible

- Quantify risk to select opportunities

- Building is the only way to know what to build

Here’s our story of building AI Assist and what we learned:

Prioritize technical research to learn what’s possible

We had a lot to learn. We had a hunch there could be an opportunity to create features that would help our users build better product onboarding and education with AI, but we didn’t know how.

We felt the opportunity AI presented was too big to ignore, and that the possible ROI justified the risk of the research becoming a sunk cost. That was a key strategic decision. To limit the downside, we time-boxed the technical research.

I spent about a week exploring product and design opportunities, and an engineer spent two dedicated weeks on technical research to understand what was possible.

Our research included:

- looking into open-source and closed-source models, tools, and AI providers

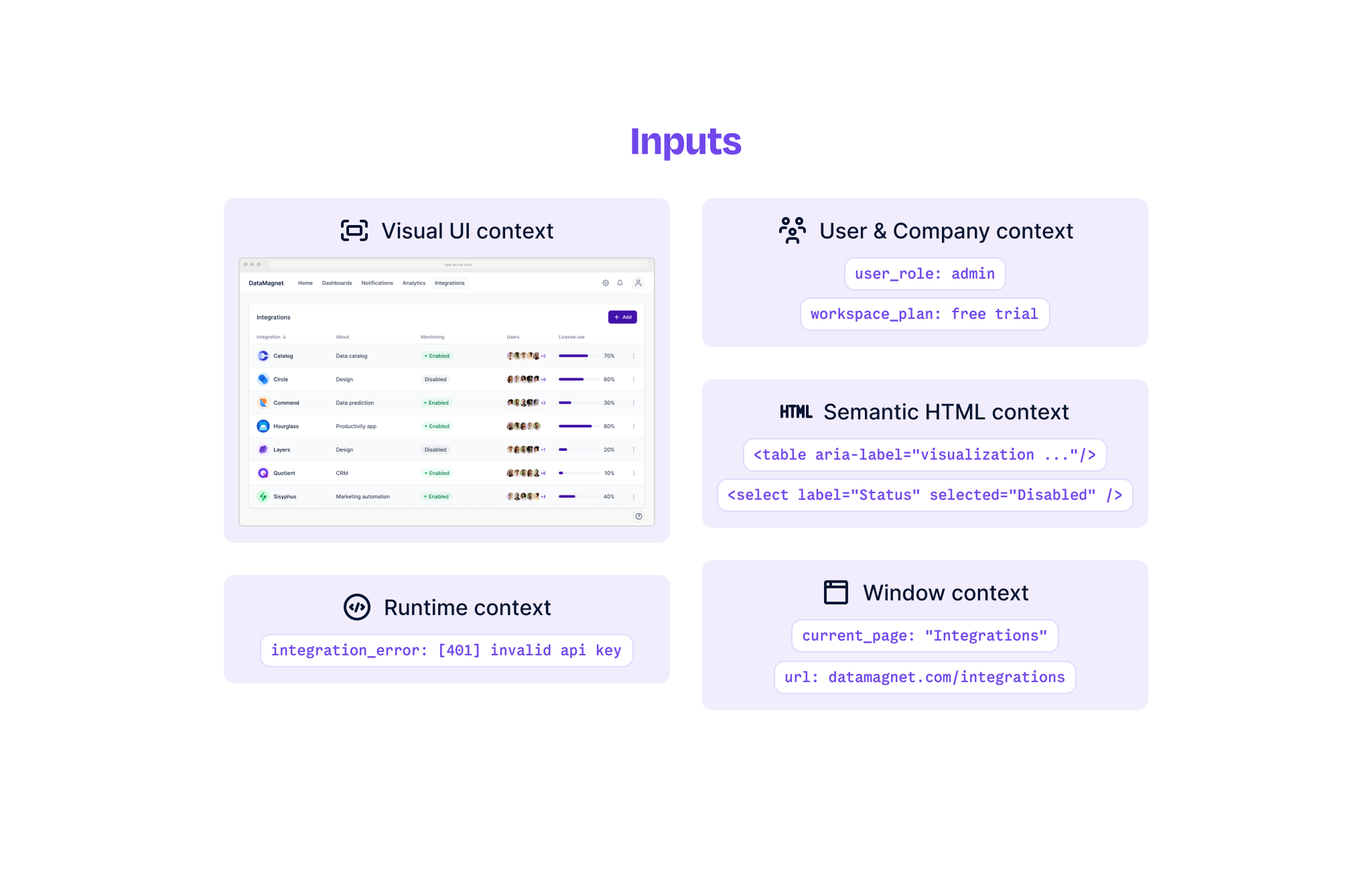

- investigating AI architectures that could take a wide variety of in-product context as input, such as HTML and screenshots of the UI, to return more relevant results (a potentially uniquely valuable feature)

- diving into research papers and newly released code and demos to build a feeling for the landscape of generative AI

Here’s some example research from Adept on using AI to understand UIs.

Many of these areas of research were not directly related to Dopt. That ended up being okay. We learned important foundational concepts.

Lesson: You have to make time for designers and developers to do technical research to learn what’s possible. Consider a spike or a time-boxed effort with key hypotheses or open questions. The research may end up being a sunk cost, and you’ll have to be comfortable with that risk. However, the research will point you toward AI solutions grounded in reality.

Quantify risk to select opportunities

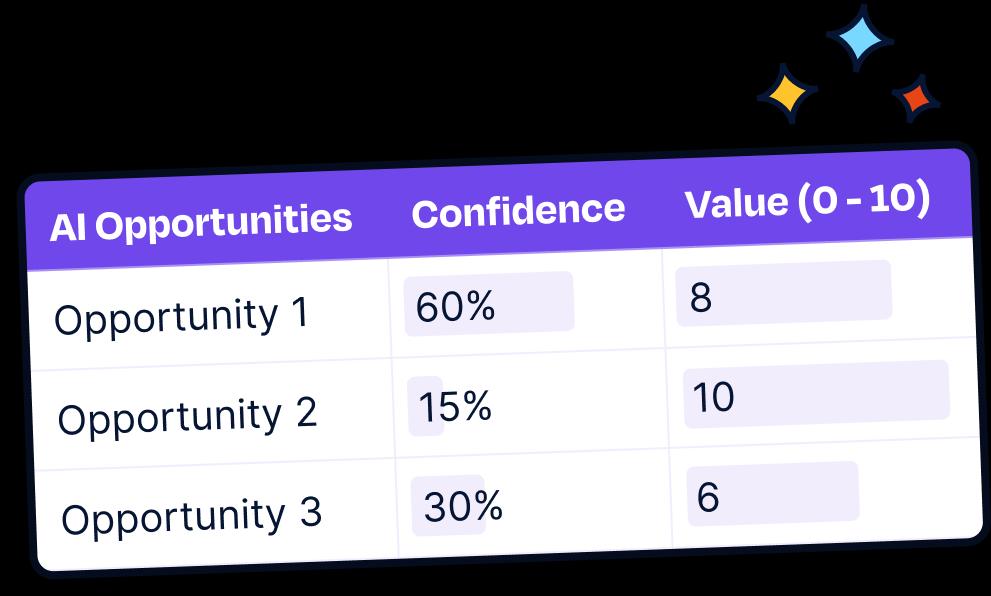

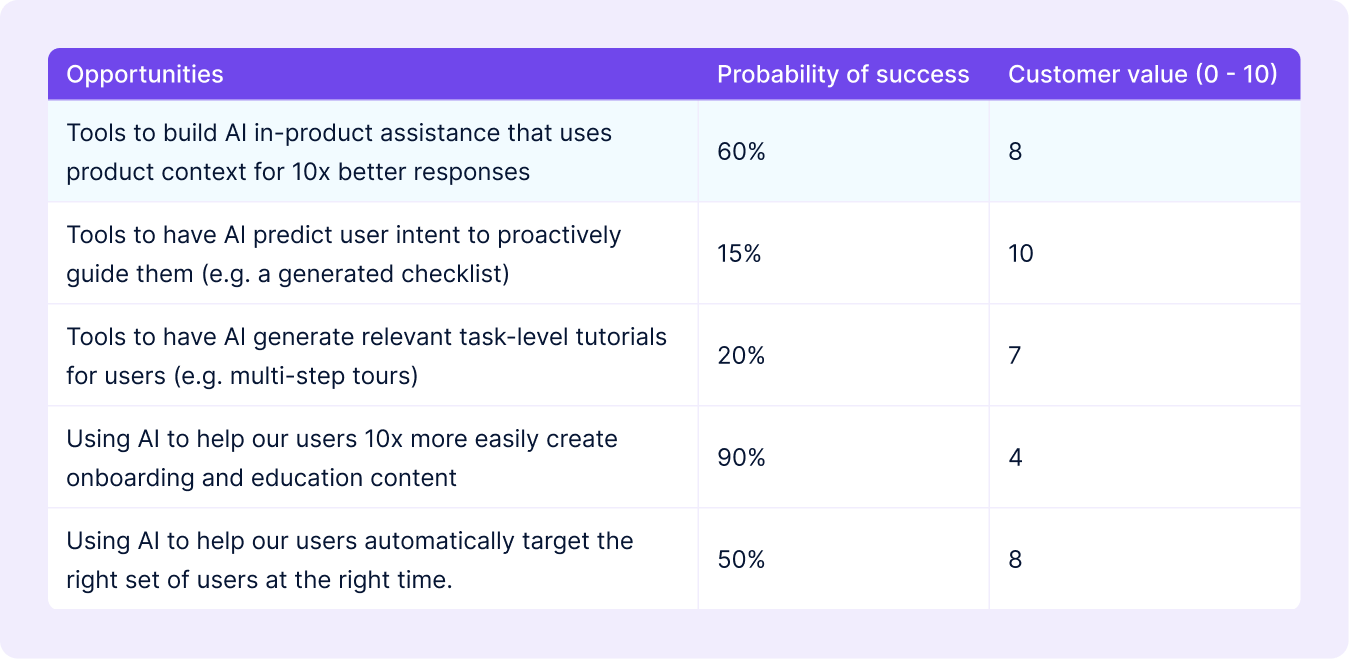

Armed with our research, we brainstormed possible AI opportunities and came up with some top contenders:

We considered two dimensions when deciding which opportunity to build: the probability of success within a three-to-six-month timeframe and the customer value if successful. Our research determined the probability of success.
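One way to make this two-dimensional comparison concrete is to multiply the two numbers into a single risk-adjusted score. The sketch below is illustrative only: the scoring formula, the 1-to-10 value scale, the `Opportunity` class, and the two placeholder ideas are all assumptions, and only the 60% figure for in-product AI assistants comes from this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Opportunity:
    name: str
    p_success: float       # estimated probability of success in the timeframe (0..1)
    customer_value: float  # relative customer value if successful (assumed 1-10 scale)

    @property
    def expected_value(self) -> float:
        # Risk-adjusted value: customer value discounted by the chance of success.
        return self.p_success * self.customer_value


def rank(opportunities: list[Opportunity]) -> list[Opportunity]:
    """Sort opportunities from highest to lowest risk-adjusted value."""
    return sorted(opportunities, key=lambda o: o.expected_value, reverse=True)


# Hypothetical inputs for illustration; only the 0.6 probability echoes the post.
candidates = [
    Opportunity("In-product AI assistants", p_success=0.6, customer_value=8),
    Opportunity("Hypothetical idea B", p_success=0.3, customer_value=10),
    Opportunity("Hypothetical idea C", p_success=0.8, customer_value=3),
]

for opp in rank(candidates):
    print(f"{opp.name}: {opp.expected_value:.1f}")
```

A single product score is the simplest possible choice. It hides the difference between a safe, low-value bet and a risky, high-value one, so it works best as a conversation starter rather than as the decision itself.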

This probability-based approach grounded us. Building in-product AI assistants had the best mix of probability of success and customer value. At 60% confidence, we still carried a fair amount of risk and had important open questions:

- Could AI reasonably understand in-product context like a UI screenshot or HTML?

- Would in-product context result in much higher quality, more relevant help responses?

- Could we return assistance in a reasonable time?

This was an important gating decision in the process: the possible customer value given the risk justified investing more time. Now we also had concrete open questions for the next steps of building.

It’s worth saying that it’s painful to leave the other opportunities unsolved. But a start-up has real constraints: with limited resources, maniacal prioritization is the only way. At a larger company, I think a true portfolio approach to building AI features could work well.

Lesson: Unlike the traditional value-versus-effort approach to prioritizing product work, AI requires a much greater emphasis on the probability of success. That means doing real upfront technical discovery and treating ROI assessments as critical go/no-go gating decisions.

Building is the only way to know what to build

Traditional product bets are largely derisked with product and design work: what’s the problem and what’s a good design solution to the problem? There’s a fair amount of certainty a design will solve a customer's problem.

That work is still important for AI features, but it’s not enough. You have to build parts of the AI feature to mitigate risk: is this solution even possible? Will the responses be of reasonable quality, often enough?

We built an AI Assist prototype to reach the next level of confidence. The goal of the prototype was to give us confidence that the AI model could return a response that was actually helpful to a user.

Our AI Assist prototype took about two full weeks of engineering time, which is fairly costly for us.

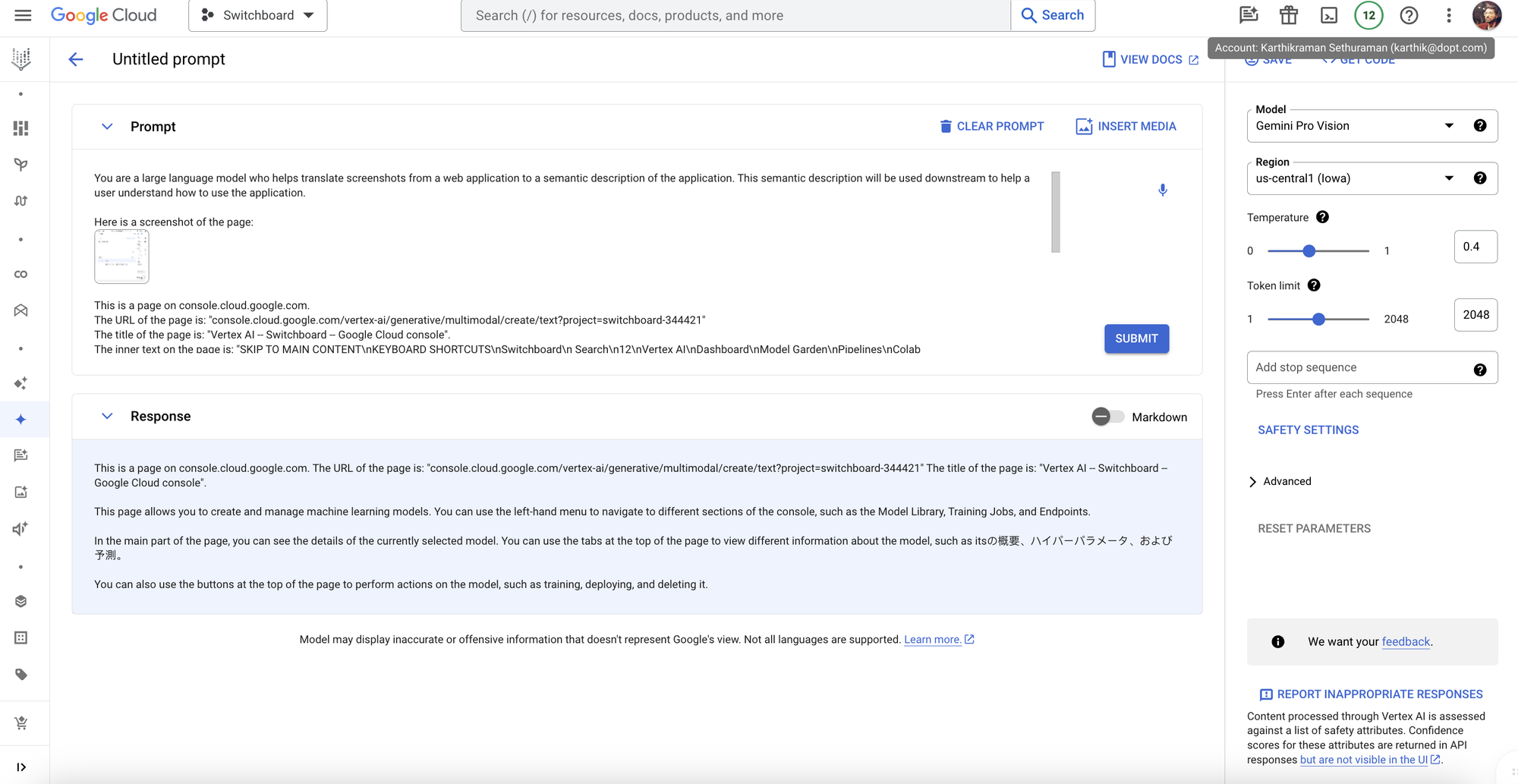

Here’s how we approached it. We collected our test product context from our own product, since that was the easiest place to gather it.

I wrote about 20 different AI Assist test use cases for Dopt, like “user selects the environment dropdown”, “user selects a checklist block”, and even “user is on the flow page”.

From there, Karthik, the engineer leading the project, wrote some lightweight code that could plug into our pages and, given specific element IDs, generate inputs such as HTML snippets and UI screenshots. That code eventually evolved into our current ai-assistant-client.

He also created scripts that called off-the-shelf AI providers to generate output, along with a customized model that worked with our docs.

Together, these pieces let him simulate our end-to-end AI pipeline, which took the input and ran it through the custom model to get a response.
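To show the shape of a prototype pipeline like this, here is a minimal sketch. It is not the actual ai-assistant-client or Karthik’s scripts: every name in it (`ProductContext`, `UseCase`, `build_prompt`, `run_pipeline`, `stub_model`), the prompt wording, and the example element ID and HTML are hypothetical. The model is a stub, so the code runs without any AI provider.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ProductContext:
    element_id: str
    html_snippet: str
    screenshot_png: bytes  # raw image bytes; left empty in this sketch


@dataclass(frozen=True)
class UseCase:
    description: str
    context: ProductContext


# A "model" here is any callable that takes (prompt, screenshot) and returns text.
Model = Callable[[str, bytes], str]


def build_prompt(use_case: UseCase) -> str:
    """Combine the user action and in-product context into one text prompt."""
    ctx = use_case.context
    return (
        "You are an in-product assistant.\n"
        f"User action: {use_case.description}\n"
        f"Element ID: {ctx.element_id}\n"
        f"HTML:\n{ctx.html_snippet}\n"
        "Explain what this element does and what the user can do next."
    )


def run_pipeline(use_cases: list[UseCase], model: Model) -> dict[str, str]:
    """Run every test use case through the model and collect the responses."""
    return {
        uc.description: model(build_prompt(uc), uc.context.screenshot_png)
        for uc in use_cases
    }


def stub_model(prompt: str, screenshot: bytes) -> str:
    # Placeholder standing in for a real provider call (assumption, not a real API).
    return f"[stub response for a {len(prompt)}-character prompt]"


use_cases = [
    UseCase(
        "user selects the environment dropdown",
        ProductContext(
            element_id="environment-dropdown",  # hypothetical ID
            html_snippet="<select id='environment-dropdown'></select>",
            screenshot_png=b"",
        ),
    ),
]

responses = run_pipeline(use_cases, stub_model)
for description, response in responses.items():
    print(f"{description} -> {response}")
```

Passing the model in as a plain callable keeps the sketch provider-agnostic: swapping the stub for a real provider call would not change the rest of the pipeline.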

The responses from Karthik’s prototypes were raw, but better than expected. They validated that in-product context was a useful input for generating helpful AI assistance.

The technical prototype was paired with design prototypes to understand how the two would work together.

We treated the results from the prototypes as another gating decision: our confidence in our ability to deliver amazing assistance using in-product contexts went way up — enough for us to continue building.
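A gating decision like this can be made more explicit by rating each prototype response as helpful or not and comparing the helpful rate against a bar chosen in advance. The sketch below assumes that approach; the post does not say responses were scored this way. The ratings and the 0.6 threshold are invented for illustration, and `helpful_rate` and `gate` are hypothetical helpers.

```python
def helpful_rate(ratings: dict[str, bool]) -> float:
    """Fraction of test use cases whose response a reviewer marked as helpful."""
    if not ratings:
        raise ValueError("need at least one rated response")
    return sum(ratings.values()) / len(ratings)


def gate(ratings: dict[str, bool], threshold: float) -> str:
    """Go/no-go: continue only if enough responses were rated helpful."""
    return "continue" if helpful_rate(ratings) >= threshold else "stop or rethink"


# Made-up ratings for three of the test use cases; not real results.
ratings = {
    "user selects the environment dropdown": True,
    "user selects a checklist block": True,
    "user is on the flow page": False,
}

print(f"helpful rate: {helpful_rate(ratings):.0%}")
print(f"decision: {gate(ratings, threshold=0.6)}")
```

Setting the threshold before looking at the responses helps keep the gate honest, because the bar cannot drift toward whatever the prototype happened to produce.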

Lesson:

When building traditional products, you can be fairly certain a feature will work once you have a quality design and have confirmed technical feasibility.

With AI features, you must iteratively build, assess confidence, and decide. You never have full confidence that “it will work”. Instead, you must build and prototype the feature to reduce risk and increase confidence in the probability of success.

As a founder or PM, this approach enables you to work through critical gating decisions: do you move forward with building the feature, or not?

Fully committing to building

We did enough prototyping that we were ~80% confident that AI assistance based on product context would work well enough. We could close the last 20% during execution with things like prompt engineering.

We’re still early in building AI Assist and have more to learn, but going from 0 to 1 taught us how to mitigate risk for AI features by iteratively building to increase confidence. I hope it’s helpful for you as you build your AI features!

You can learn more about AI Assist and get started for free on our website.